Funny pun!

If you’ve ever set up any computing device, up to and including a smartphone, you’ve done configuration management. If you’re not a system administrator, chances are you’re doing it the really old-school way. You know what you want the computer to look like and do, and you know pretty much how to get it there, so you just sit down and install programs and change settings and so forth until the computer looks right. Possibly you find out the next day that you missed something, but that’s easy enough to fix.

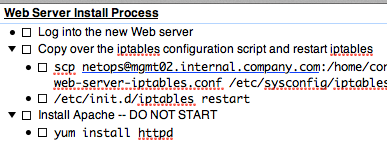

At AltaVista, we had this awesome configuration system written in Perl. We copied a script over to any new server, added the new server to a text file back on the main configuration server, and ran the script. It copied a bunch of files over to the new server, some of which were shell scripts. I seem to recall it’d then run the scripts. (Yeah, I know.) You could define individual packages and specify which servers got which packages; you could also update individual packages and the updates would likewise get pushed out. It was fairly clunky. On the other hand, conceptually it wasn’t that far from what we’re doing these days.

Fast forward 10 years. There are two very popular open source UNIX-oriented configuration tools, Puppet and Chef. (Both of these have recently added Windows support, but I haven’t used either of them to manage Windows machines.) There are also a ton of other choices if you don’t like Ruby, the language both of them use. I’d also be remiss if I didn’t mention that the original configuration management system, CFEngine, is still going strong. However, Chef and Puppet both have a lot of momentum: it’s easier to find people who’ve used them, they’ve both got good commercial support, and so on.

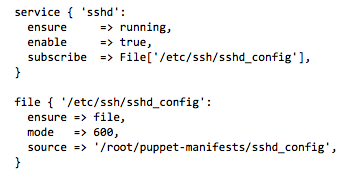

The core idea behind any modern configuration management system is the ability to describe the desired state for a computer in one central location, which then propagates out to individual systems. The key concept there is that you’re describing the desired state, not the steps necessary to get there. In other words, rather than writing a script that runs the commands required to install a Web server, you’d specify that you wanted the Web server package installed. Likewise, instead of writing the command to start your game server, you’d specify that you want the game server software to be running at all times. Puppet and Chef both allow you to control a wide range of states including software package installs, which services should be running, user accounts, and so on.
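To make the state-versus-steps distinction concrete, here’s a minimal Python sketch. It is not how Puppet or Chef work internally; `HostState` and `plan` are names I made up for illustration, and the "machines" are just sets in memory. The point is that you hand over a description of what the box should look like, and something else works out what, if anything, needs doing.

```python
from dataclasses import dataclass, field


@dataclass
class HostState:
    """Toy model of one machine (hypothetical, for illustration only)."""
    packages: set = field(default_factory=set)   # installed packages
    services: set = field(default_factory=set)   # running services


def plan(current: HostState, desired: HostState) -> list:
    """Work out the actions needed to move `current` to `desired`."""
    actions = []
    for package in sorted(desired.packages - current.packages):
        actions.append(("install", package))
    for service in sorted(desired.services - current.services):
        actions.append(("start", service))
    return actions


# The desired state says what we want, not how to get there.
desired = HostState(
    packages={"webserver", "gameserver"},
    services={"gameserver"},
)

fresh_box = HostState()
half_built_box = HostState(packages={"webserver"})

fresh_plan = plan(fresh_box, desired)
half_built_plan = plan(half_built_box, desired)
```

The same description yields a shorter list of actions for the half-built box than for the fresh one, and an empty list for a box that already matches. A hand-written install script gives you none of that for free.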

Assuming the configuration management software is well written, your configuration specifications are idempotent. That means you can apply the configuration to a given server as many times as you want without screwing anything up. To see why that matters, go back to the Web server example and imagine a plain install script that recompiled or reinstalled the Web server software every time the configuration management system checked for updates. It might not hurt anything, but it’d be unnecessary work.

Your script could certainly start out by checking to see if the Web server was already installed, in which case your script would also be idempotent. But you’d have to do that for every script you wrote. If you use a configuration management system, you’re getting the benefit of that check without having to reinvent the wheel for every single element of the system.
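Here’s a rough Python sketch of that "check first, then act" pattern written once and reused. Everything in it is an assumption for illustration: `ensure` and `converge` are hypothetical names, and the two sets stand in for whatever a real tool would ask the package manager and service supervisor.

```python
from typing import Callable


def ensure(is_satisfied: Callable[[], bool], apply: Callable[[], None]) -> bool:
    """Run `apply` only if the desired condition doesn't already hold.

    Returns True if work was done, False if nothing needed to change.
    """
    if is_satisfied():
        return False
    apply()
    return True


# Stand-ins for real system state; a real tool would query the package
# manager and the service supervisor instead of Python sets.
installed_packages = set()
running_services = set()


def converge() -> list:
    """Apply the whole (tiny) configuration and report what changed."""
    changed = []
    if ensure(lambda: "webserver" in installed_packages,
              lambda: installed_packages.add("webserver")):
        changed.append("package:webserver")
    if ensure(lambda: "webserver" in running_services,
              lambda: running_services.add("webserver")):
        changed.append("service:webserver")
    return changed


first_run = converge()
second_run = converge()
```

The first run installs and starts the Web server; the second run finds both conditions already satisfied and changes nothing. That second, do-nothing run is what idempotent means in practice.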

So that’s the importance of abstraction. The other big thing you get out of using Puppet or Chef is modularity. Let’s say I have two modules that set up the basic networking for my two data centers. I can easily set up my configuration servers such that any server in my New York data center automatically has the New York module applied and any server in my San Mateo data center has the San Mateo module applied. I don’t have to assign each server to one of the data centers by hand; the configuration server just needs to know that any server within a given IP address range belongs to New York, and so on.
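The IP-range lookup is simple enough to sketch in a few lines of Python using the standard `ipaddress` module. The address ranges and module names below are invented for the example, and Puppet and Chef each have their own ways of expressing this; it’s only meant to show the idea.

```python
import ipaddress
from typing import Optional

# Hypothetical address ranges; substitute whatever your data centers use.
DATACENTER_NETWORKS = {
    "new_york": ipaddress.ip_network("10.1.0.0/16"),
    "san_mateo": ipaddress.ip_network("10.2.0.0/16"),
}


def datacenter_module(address: str) -> Optional[str]:
    """Return the networking module for a server, based on its IP address."""
    ip = ipaddress.ip_address(address)
    for name, network in DATACENTER_NETWORKS.items():
        if ip in network:
            return name
    return None  # not in any known data center


ny_server = datacenter_module("10.1.4.20")
sm_server = datacenter_module("10.2.200.7")
stray_server = datacenter_module("192.168.0.5")
```

Nobody had to add either server to a list by hand. A new box in the New York range picks up the New York module as soon as it shows up.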

You can also differentiate by a ton of other criteria. When a computer connects to the configuration server, it reports a bunch of information: its name, its physical characteristics (memory, CPU, etc.), what operating system it’s running — all that jazz. If my forum Web servers are all named something like forum-web-01.internal.company.com, and my billing Web servers are all named something like billing-web-01.internal.company.com, my configuration system can apply the basic Web package to both classes of server while applying the specific tweaks for forum servers to those servers only. Super-powerful stuff.
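Name-based classification can be sketched the same way. This uses shell-style patterns from Python’s standard `fnmatch` module; the module names (`web_base`, `forum_tweaks`, `billing_tweaks`) and the rule table are made up for the example and aren’t anything Puppet or Chef define.

```python
from fnmatch import fnmatchcase

# (hostname pattern, module) pairs; every matching rule applies, in order.
RULES = [
    ("*-web-*.internal.company.com", "web_base"),
    ("forum-web-*.internal.company.com", "forum_tweaks"),
    ("billing-web-*.internal.company.com", "billing_tweaks"),
]


def modules_for(hostname: str) -> list:
    """Return every module whose pattern matches the given hostname."""
    return [module for pattern, module in RULES if fnmatchcase(hostname, pattern)]


forum_modules = modules_for("forum-web-01.internal.company.com")
billing_modules = modules_for("billing-web-01.internal.company.com")
other_modules = modules_for("db-01.internal.company.com")
```

Both Web server classes pick up `web_base`, each gets only its own tweaks, and the database box matches nothing.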

Now, I mentioned that coders will recognize that all this is basically an abstract API. The not-so-secret implication is that a system administrator writing these configurations is in fact a developer. Puppet uses its own language, but Chef configuration files are actually pure Ruby, so if you’re working with Chef you don’t have any excuse for pretending you’re not using a programming language just like those guys down the hallway who keep complaining about Visual Studio quirks.

Configuration is code.