At my last job, a product manager asked me if I had any reading recommendations for someone who wanted to learn more about what an SRE (Site Reliability Engineer) does. I wrote one up; here it is.

Big ol’ 17 MB PDF file available right here.

My GDC presentation, lavishly titled “Devops: Bringing Development and Operations Together for Better Everything”, was on Tuesday, 10/9 at 4:50 PM. This post is a placeholder for comments and links to resources.

Your comments, positive or negative, are vastly appreciated.

Resources

Books

- Web Operations: Keeping the Data On Time (Allspaw and Robbins)

- The Visible Ops Handbook: Implementing ITIL in 4 Practical and Auditable Steps (Behr, Kim, and Spafford)

Blogs

- Planet Devops (aggregator)

- High Scalability (scaling topics)

- Code as Craft (Etsy DevOps blog)

Mailing Lists

Events

- devopsdays: worldwide, sessions recorded & freely viewable

- Surge: Baltimore, run by OmniTI, sessions recorded & freely viewable

- Velocity: Santa Clara, Europe, China

Topics

- Continuous integration (Martin Fowler — his site has great stuff on continuous delivery, too)

I have been pretty busy with travel lately, but it turns out to be sort of reasonable to write on an iPad while flying. This post is brought to you by JetBlue. Let’s talk about a few best engineering practices as they apply to technical operations.

1: Code review is a good idea. This is a new practice for many sysadmins, but most of us get the idea that you want someone else to eyeball a maintenance change before you make it live. Sell it that way. If your engineering department has code review software in place, you might as well give it a try. Chances are it’s tied into the source control system you’re both using, and automation reduces friction.

As a manager, the best thing I can do to help people get comfortable with formal code review is to reduce the friction. I want code reviewed as quickly as possible. This means being ready to do it myself if necessary, although you don’t want people dependent on you. So complain in your daily standups when other people are falling down on the job.

Oh, and get programmers involved too. You want them interested in Puppet and init scripts. You also want to be at least an observer on their code reviews, and it’s easier to get that if you invite them into your house first.

2: Integrate continuously. When you change something about a machine build, test that change in a local VM. (Take a look at Vagrant for one good tool that helps a lot with that.) After you check your changes in, the build system should do an automated test.

I personally like to have two different development environments in the build system. One is the usual engineering development environment; the other is the technical operations environment. You could integrate ops changes side by side with engineering changes, but I’m still a bit leery of that. Especially in the early days, you’ll wind up breaking the test systems a lot. The natural developer reaction to that is annoyance. I’d rather avoid that pain point and give ops a clean test bed; the tested results can integrate into the main flow daily or as necessary.

I may change my mind on this eventually. The counter-argument is the same case I’ve been making all along: configuration is code. Why treat it any differently than the rest of the codebase? That’s a pretty compelling argument and I think the perfect devops group would do it that way. I just don’t want to drive anyone insane on the way there.

3: Hire coders. I am pretty much done hiring system administrators who can’t write code in some language. I don’t care if it’s perl or ruby or python or whatever. I probably don’t want someone who only knows bash, but I could be convinced if they’re a really studly bash programmer.

I want to have a common language for the entire group; it’s kind of messy if half the routine scripts are written in perl and half are written in ruby. If nothing else, you’re cutting back on the number of people who can debug your problems. Because tech ops people like variety, it’s almost certain that we’ll be using a bunch of scripting languages. If you have Puppet and Splunk installed, you’re stuck with ruby (for Puppet) and python (for Splunk) and you’re going to need to know how to code in both. Still, you can at least make your own scripts predictable.

I can’t dig up any video of this, but Adam Jacob has a great spiel about how you’re probably a programming polyglot even if you don’t know it. If you speak perl, you’ll be able to puzzle your way through enough python to write a quick Splunk script. When hiring, you want to make sure your candidate has a reasonably good understanding of one of the common scripting languages. It doesn’t matter so much if it’s the department standard.

Your technical operations people will be writing code, though. There’s a lot of complexity in automation. That complexity is abstracted so you can deal with it on a day to day basis, and the process of abstraction is writing code. So hire coders.

The one exception is entry-level NOC people, and not every group has those. If you do have a 24/7 NOC, those guys may not be coders. However, it is mandatory to give those employees training programs which include coding. It’s hard enough maintaining camaraderie between off-hours employees and the day shift without having knowledge barriers.

Big sympathies for my peers and compatriots over at BioWare Austin today. The live SW:TOR servers are down for the next seven hours or so after a deployment issue resulted in old data being pushed live. Since I’m not there, I can’t make any kind of informed guess as to exactly how this happened. They’ve said they need to rebuild assets; the time span for this fix is the combination of however long it takes to do the rebuild and however long it takes to push the new assets out to all their servers. Both of these are potentially time-consuming. A window that long makes me think they need to rebuild a large chunk of the data from scratch.

In general, I recommend implementing a checksum into your push process and server startup scripts. Before you fire up a server binary, you should run a checksum (maybe Adler-32) on your binaries and your data files, and make sure they match what’s expected for that build. If they don’t match, throw an error and don’t run the binary.

There are potential speed issues here if your data is large. You can speed up the process by calculating the checksum for a smaller portion of each file, or you could be a little more daring and just compare file names and sizes. Bear in mind that you’re trying to compensate for failures of the release system here, though, so you wouldn’t want to rely only on simple checks.

Also, if you’re looking at checksums which were also generated by the build system, you need to account for the possibility that those checksums are wrong. Single points of failure can occur in software as well as hardware.
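Here’s a minimal sketch of that startup check in Python. It is an illustration, not anyone’s actual release tooling: the function names, the manifest format (relative path mapped to expected Adler-32 value), and the file names in the usage note are all assumptions made up for this example.

```python
import zlib
from pathlib import Path


def adler32_of(path: Path, chunk_size: int = 1 << 20) -> int:
    """Compute Adler-32 over a file in chunks so large assets need not fit in memory."""
    checksum = 1  # Adler-32 starting value
    with path.open("rb") as handle:
        while chunk := handle.read(chunk_size):
            checksum = zlib.adler32(chunk, checksum)
    return checksum & 0xFFFFFFFF


def verify_build(root: Path, manifest: dict[str, int]) -> list[str]:
    """Return relative paths that are missing or whose checksum differs from the manifest.

    `manifest` is a hypothetical mapping of relative path -> expected Adler-32.
    """
    bad = []
    for relative_path, expected in manifest.items():
        target = root / relative_path
        if not target.is_file() or adler32_of(target) != expected:
            bad.append(relative_path)
    return bad
```

A startup script would call `verify_build` on the install directory and refuse to launch the server binary if the returned list is non-empty. Where the manifest comes from matters as much as the check itself, for the reason given above: if the same build step produces both the files and the expected values, a bad build can happily verify itself.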

We’ve established that it’s way simple to manage configurations across a large number of servers. This is great for operating data centers. It’s also great for code promotion: by using configuration management consistently throughout your server environments, you reduce the chances of problems when pushing server code and data live.

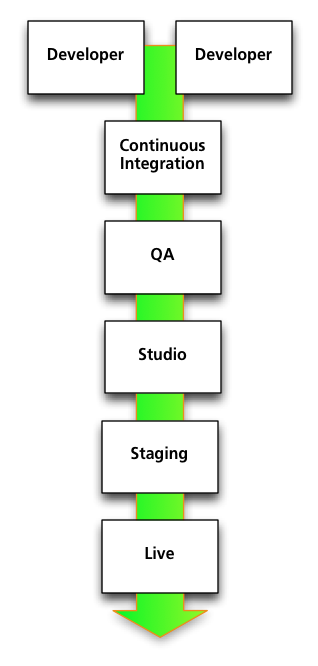

Here’s a generic simplified code promotion path. There are a bunch of developers coding away on their workstations or individual development servers. They check their changes into source control. Every time a change is checked in, the server gets rebuilt and a series of automated tests get run. If the build compiles successfully and passes the tests, it’s automatically pushed to the Continuous Integration environment.

From there, the build is pushed to QA. This is probably not triggered automatically, since QA will want to say when they’re ready for a new push. Once it’s through QA, it goes to the Studio environment for internal playtests and evaluations, then to a Staging environment in the data center, and finally to Live.

In practice, there’s also a content development path that has to go hand in hand with this, and since content can crash a server just as well as code can, it needs the same testing. This can add complexity to the path in a way that’s out of scope for this post, but I wanted to acknowledge it.

Back to configuration. Each one of these environments is made up of a number of servers. Those servers need to be configured. As may or may not be obvious, those configurations are in flux during development. They won’t see as much change as the code base, but we definitely want to tune them, modify them, and fix bugs in them. We do not want to try to replicate all the changes we’ve made to the various development environments by hand in the data center, even if we’re using a configuration management system at the live level. The goal is to reduce the number of manual changes that need to be made.

You can’t use the exact same configurations in each environment since underlying details like the number of servers in a cluster, the networking, and so on will change. Fortunately Chef and Puppet both support environments. This means you can use the same set of configuration files throughout your promotion path. You’ll specify common elements once, reducing the chances of fumbling fingers. Configurations that need to change can be isolated on a per-environment basis.

Using Puppet as our example, since I’m more familiar with it, specifying which version of the game server you want gets reduced down to something as simple as this:

```puppet
$gameserver_package = "mmo_game_server.${environment}"

package { $gameserver_package:
  ensure => installed,
}
```

The $environment variable is set in the puppet configuration file on each server. Make sure your build system is naming packages appropriately — which is a one-time task — and you control server promotion by pressing the right buttons in the build system with no configuration file edits needed at all.
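The “specify common elements once, isolate what changes per environment” idea can be sketched outside of Puppet too. This is plain Python, not how Puppet or Chef implement environments, and every setting name and value below (cluster sizes, log levels, environment names) is a made-up placeholder.

```python
# Settings shared by every environment in the promotion path (hypothetical values).
COMMON = {"package": "mmo_game_server", "log_level": "info", "cluster_size": 1}

# Only the differences are listed per environment (hypothetical values).
OVERRIDES = {
    "qa": {"log_level": "debug"},
    "staging": {"cluster_size": 4},
    "live": {"cluster_size": 40, "log_level": "warning"},
}


def settings_for(environment: str) -> dict:
    """Merge the common settings with one environment's overrides."""
    if environment not in OVERRIDES:
        raise ValueError(f"unknown environment: {environment}")
    merged = {**COMMON, **OVERRIDES[environment]}
    # Mirror the package naming convention from the Puppet snippet above.
    merged["package"] = f"{COMMON['package']}.{environment}"
    return merged
```

Calling `settings_for("qa")` picks up the debug log level while inheriting everything else, so a fix to a common setting only has to be made in one place.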

Funny pun!

If you’ve ever set up any computing device, up to and including a smartphone, you’ve done configuration management. If you’re not a system administrator, chances are you’re doing it really old school style. You know what you want the computer to look like and do, and you know pretty much how to get it there, so you just sit down and install programs and change settings and so forth until the computer looks right. Possibly you find out that you missed something the next day, but that’s easy enough to fix.

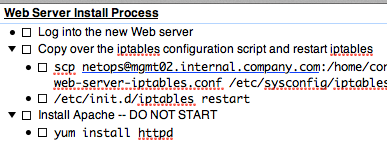

At AltaVista, we had this awesome configuration system written in perl. We copied a script over to any new server, and added the new server to a text file back on the main configuration server, and ran the script. It copied a bunch of files over to the new server, some of which were shell scripts. I seem to recall it’d then run the scripts. (Yeah, I know.) You could define individual packages and specify which servers got which packages; you could also update individual packages and the updates would likewise get pushed out. It was fairly clunky. On the other hand, conceptually it wasn’t that far from what we’re doing these days.

Fast forward 10 years. There are two very popular open source UNIX-oriented configuration tools, Puppet and Chef. (Both of these have recently added Windows support, but I haven’t used either of them to manage Windows machines.) There are also a ton of other choices if you don’t like Ruby, the language which both of them use. I’d also be remiss if I didn’t mention that the original configuration management system, CFEngine, is still going strong. However, Chef and Puppet both have a lot of momentum: it’s easier to find people who’ve used them, they’ve both got good commercial support, and so on.

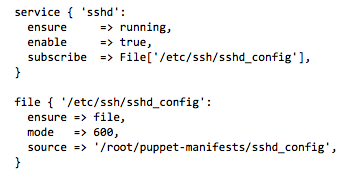

The core idea behind any modern configuration management system is the ability to describe the desired state for a computer in one central location, which then propagates out to individual systems. The key concept there is that you’re describing the desired state, not the steps necessary to get there. In other words, rather than writing a script that runs the commands required to install a Web server, you’d specify that you wanted the Web server package installed. Likewise, instead of writing the command to start your game server, you’d specify that you want the game server software to be running at all times. Puppet and Chef both allow you to control a wide range of states including software package installs, which services should be running, user accounts, and so on.

Assuming the configuration management software is well written, your configuration specifications are idempotent. That means you can apply the configuration to a given server as many times as you want without screwing anything up. Contrast that with a naive install script for the Web server example: imagine that we were recompiling or reinstalling the Web server software each time the configuration management system checked for updates. It might not hurt anything, but it’d be unnecessary work.

Your script could certainly start out by checking to see if the Web server was already installed, in which case your script would also be idempotent. But you’d have to do that for every script you wrote. If you use a configuration management system, you’re getting the benefit of that check without having to recreate the wheel for every single element of the system.
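To make the “check first, then act” pattern concrete, here’s a small hand-rolled sketch in Python. The `ensure_line` helper is hypothetical, invented for this example; it stands in for the kind of check a configuration management system performs for you on every resource.

```python
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Make sure `line` is present in the file; return True only if a change was made."""
    text = path.read_text() if path.exists() else ""
    if line in text.splitlines():
        return False  # desired state already holds, so do nothing
    # Avoid gluing the new line onto an existing last line that lacks a newline.
    separator = "" if text == "" or text.endswith("\n") else "\n"
    with path.open("a") as handle:
        handle.write(f"{separator}{line}\n")
    return True
```

Running it once adds the line; running it a hundred more times changes nothing. Multiply that bookkeeping by packages, services, and user accounts and you can see why you’d rather get it from the tool than write it yourself.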

So that’s the importance of abstraction. The other big thing you get out of using Puppet or Chef is modularity. Let’s say I have two modules that set up the basic networking for my two data centers. I can easily set up my configuration servers such that any server in my New York data center automatically has the New York module applied and any server in my San Mateo data center has the San Mateo module applied. I don’t have to assign each server to one of the data centers by hand; the configuration server just needs to know that any server within a given IP address range belongs to New York, and so on.

You can also differentiate by a ton of other criteria. When a computer connects to the configuration server, it reports a bunch of information: its name, its physical characteristics (memory, CPU, etc.), what operating system it’s running — all that jazz. If my forum Web servers are all named something like forum-web-01.internal.company.com, and my billing Web servers are all named something like billing-web-01.internal.company.com, my configuration system can apply the basic Web package to both classes of server while applying the specific tweaks for forum servers to those servers only. Super-powerful stuff.
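As a rough sketch of that kind of classification logic, here’s the idea in plain Python. This is not Puppet’s or Chef’s node classification API; the IP ranges, module names, and the `modules_for` function are assumptions invented for the example, and only the hostname patterns come from the paragraph above.

```python
import ipaddress
import re

# Hypothetical address ranges for the two data centers.
DATACENTER_NETWORKS = {
    "new_york": ipaddress.ip_network("10.1.0.0/16"),
    "san_mateo": ipaddress.ip_network("10.2.0.0/16"),
}

# Hostname patterns mapped to the (hypothetical) modules each class of server gets.
ROLE_PATTERNS = [
    (re.compile(r"^forum-web-\d+\."), ["web_base", "forum_tweaks"]),
    (re.compile(r"^billing-web-\d+\."), ["web_base", "billing_tweaks"]),
]


def modules_for(hostname: str, address: str) -> list[str]:
    """Pick modules for a server from the facts it reports: its IP address and name."""
    modules = []
    ip = ipaddress.ip_address(address)
    for datacenter, network in DATACENTER_NETWORKS.items():
        if ip in network:
            modules.append(f"network_{datacenter}")
    for pattern, role_modules in ROLE_PATTERNS:
        if pattern.match(hostname):
            modules.extend(role_modules)
    return modules
```

A forum Web server in New York ends up with the New York networking module, the shared Web module, and the forum tweaks, and nobody had to assign any of that by hand.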

Now, I mentioned that coders will recognize that all this is basically an abstract API. The not-so-secret implication is that a system administrator writing these configurations is in fact a developer. Puppet uses its own language, but Chef configuration files are actually pure Ruby, so if you’re working with Chef you don’t have any excuse for pretending you’re not using a programming language just like those guys down the hallway who keep complaining about Visual Studio quirks.

Configuration is code.

I’d been leaning towards OpenStack for my private clouds; it’s not entirely production-ready yet, but with Dell, HP, and Rackspace behind it I had high hopes. But Citrix just fully open-sourced CloudStack. As Lydia Leong notes, this changes the calculus a bit.

She mentions HP’s cloud efforts, but I’m going to be watching Dell. I think Rob Hirschfeld’s Crowbar project is going to hit the sweet spot for people who want to deploy private clouds. They’re deeply involved in the OpenStack community; still, stability is attractive.

And while I’m reading Lydia’s blog, she’s right about the Eucalyptus/EC2 API license. You don’t see anyone claiming that Google+’s API (whenever it shows up) needs to be compatible with Facebook’s API in order to succeed.

Devops was a concept on the horizon two years ago. Since then I’ve had the chance to dig into it, figure out what I didn’t understand then, and implement an agile devops focused work environment. In the interests of demystifying what I do, I’m going to lay out what all those buzzwords currently mean to me. Spoiler: I still don’t think continuous deployment all the way to live makes sense for MMOs. Social games may be another matter.

First Principle

Devops is the principle of applying software development methodologies to the discipline of system administration and vice versa. There are a couple of reasons to do this. First, the two fields are closely related and they have a lot to learn from one another. At the end of the day you’re giving directions to computers and hoping you said it right. If developers have learned some cool tricks for double-checking your hopes before you subject them to a live datacenter, bonus. If system administrators have learned some stuff about testing code in the same live datacenter and we can pull those tests forward in the dev process, double bonus.

Second, there’s a tendency for groups using the same techniques to talk more. Anything that gets groups to talk more is a good thing. I have a grand plan to invent marketops sometime in the next couple of years just so I can get marketing and operations groups to talk more. Mardevops comes next. Don’t think I’m not serious.

OK, so we have a principle and some reasons why it’s a good idea. Let’s break that down a bit further and spend a little time on the techniques we can use to realize that principle.

Configuration is Code

The buzzphrase is “infrastructure is code.” This is a lie and I actually think it’s a bit damaging. Over the last five years, virtualization has made it very easy to think of computers as non-physical objects that can be completely reconfigured remotely. Modern server hardware technology helps; if your servers are blades, the blade chassis containing them probably manages a lot of the individual blade configuration, and any blade you plug into a given slot in the chassis will behave according to how you’ve configured that slot.

However, you’re still dealing with cables and heat profiles and power requirements. Infrastructure involves the physical. Even if we’re using a cloud, it’s critical to think about the underlying hardware in order to better understand how to provide redundancy and resilience. Consider the April 2011 Amazon EC2 outage.

Configuration is code, though. It’s just instructions for computers. Therefore, it benefits from being treated like code.

The easy implication is that we should be storing our configuration files in source control. Everyone sane does that already, so that was pretty easy. We should also be acting like we’re coders. This is harder. But do it: use code review on every configuration checkin, even if it’s just someone else eyeballing it.

Taking a step back, configuration should undergo the same kind of promotion process you’d expect from any development team. You want to be performing continuous builds. For operations, this means that every time someone modifies a configuration file, that file gets pushed into a test environment and an automated process makes sure that the device being configured still runs.

Scrum, Sometimes

Every game company I’ve worked for has used some form of Scrum methodology for development. Online game operations groups should get on the bandwagon. Carefully.

If you try to apply Scrum to everything you’ll do in operations, you’ll find out pretty quickly that Scrum is designed for projects and you do a lot of maintenance work. Don’t try and use Scrum there. If something breaks, do not write a user story about how “As a user, I would like gameserver01.bos.gamecompany.com to be functional.”

Instead, treat it as a bug. Use your existing bug system if possible. This is convenient because it’s pretty easy to explain priority A bugs to your producers, and they’ll understand why it’s affecting your velocity. This also subtly implies that QA should be involved in verifying the fixes. That’s not an accident.

Also, identify tasks that can’t be decomposed into sprint-sized chunks and think about applying Kanban techniques there. Clinton Keith threw out Kanban as the appropriate agile operations technique in a seminar I took once. It’s not the be all and end all — that’s a misapprehension caused by not enough communication between development and operations — but it’s not bad if you’re doing a lot of repetitive tasks.

Finally, cross-fertilize your scrums. I don’t think we really want an operations scrum; rather, we want a datacenter scrum, which is primarily focused on operational tasks. We want a security scrum, which needs both operational security guys and developers. We want operations people sitting on most if not all engineering-oriented scrums. Scrum is a great fit for devops because it’s oriented towards projects, not functional groups.

Testing

You don’t want developers writing code without QA involved. The same applies to system administrators.

Load testing, security monitoring, and functionality monitoring are part of good data center infrastructure. They should be part of the development and build process as well.

Squish those two together and you wind up wanting to do full stack testing as early on as possible. Testing in a simulation of a live environment — here’s where cloud technologies come in — is really useful. This makes QA involvement a natural part of the process; you want to bring the traditional code branch into an integration environment with the ops configuration branch as soon as possible. The idea is to find out if your wacky configuration ideas break the carefully crafted software earlier rather than later.

Since you’re filing infrastructure issues as bugs, you already need QA to understand how to find and report bugs in your stuff, so that all works out rather well.

Next Up

I want to dig further into each of those techniques, and I also want to talk a bit about how you can get from zero to devops. I’ll probably do the latter next. Thanks for reading, particularly since it’s been a long long while.

Attention conservation notice: this doesn’t have much to do with MMOs.

The retail cost of a JavaStation-10 was around $750, if I recall correctly. It was a great concept, because it was a great task station. You could do email, calendaring, and Web browsing. The OS was slower than you’d like, but I found it perfectly reasonable unless you were asking it to do X-Windows via Citrix or something like that. Of course, you had to have a big chunky server in the data center to serve up the OS at boot time, and the hardware didn’t include the mythical JavaChip co-processor, so complex apps could be a bit poky.

A decade later, I have an iPad sitting on my desk. It ran me around $750, but I could have gotten one at two-thirds the price. It does email, calendaring, and Web browsing, plus a ton more. The OS is fast. This would make a really good task station, especially if you housed it in a different casing and slapped on a keyboard. I have no reason to think that Apple is aiming in that direction, but I won’t be surprised if we see iOS devices designed for single purpose workstations.

Those early ideas just get better and better as the technology catches up, huh?

In a vain attempt to tie this back to the blog purpose: this thing is going to be an amazing admin tool. If I’m in the datacenter, it’ll be very useful to have a separate screen handy. I can’t count the number of times I’ve been looking at a server on a KVM and I’ve wanted to have a second monitor available for watching logs and so forth. I have an ssh client, and I have a client that does both RDP and VNC, which is key for a multi-platform admin. If I was still working in Silicon Valley back in the stupid money days, I’d try to sell my boss on buying these for my team as core tools. Cheaper than an on-call laptop.