At my last job, a product manager asked me if I had any reading recommendations for someone who wanted to learn more about what an SRE (Site Reliability Engineer) does. I wrote one up; here it is.

Big ol’ 17 MB PDF file available right here.

These are pretty rough notes from the single tech ops oriented talk I got to at GDC Online. Hao Chen and Jesse Willett did a great job filling in at what looked like the last minute — the talk was originally supposed to be on Draw Something, but it pivoted to Farmville 2 and Chefville. It’s always interesting to hear perspective from a big datacenter like Zynga.

My commentary is in footnotes because it’s been too long since I used my nifty footnote plugin.

My GDC presentation, lavishly titled “Devops: Bringing Development and Operations Together for Better Everything”, was on Tuesday, 10/9 at 4:50 PM. This post is a placeholder for comments and links to resources.

Your comments, positive or negative, are vastly appreciated.

Resources

Books

- Web Operations: Keeping the Data On Time

(Allspaw and Robbins)

- The Visible Ops Handbook: Implementing ITIL in 4 Practical and Auditable Steps

(Behr, Kim, and Spafford)

Blogs

- Planet Devops (aggregator)

- High Scalability (scaling topics)

- Code as Craft (Etsy DevOps blog)

Mailing Lists

Events

- devopsdays: worldwide, sessions recorded & freely viewable

- Surge: Baltimore, run by OmniTI, sessions recorded & freely viewable

- Velocity: Santa Clara, Europe, China

Topics

- Continuous integration (Martin Fowler — his site has great stuff on continuous delivery, too)

I’m very pleased to let y’all know that I’ll be presenting at GDC Online in Austin this October. My session is “Devops: Bringing Development and Operations Together for Better Everything” and it’ll be, you know, the kind of things I talk about. The target audience is anyone in game development who wants engineering and technical operations to work better together. I’m really psyched. Also really nervous, but that’ll pass once I actually deliver the talk.

Big cheers to my old team and friends at ZeniMax Online Studios, who got to announce Elder Scrolls Online yesterday. Note: announcement Web site living in the cloud. Very nice.

I have been pretty busy with travel lately, but it turns out to be sort of reasonable to write on an iPad while flying. This post is brought to you by JetBlue. Let’s talk about a few best engineering practices as they apply to technical operations.

1: Code review is a good idea. This is a new practice for many sysadmins, but most of us get the idea that you want someone else to eyeball a maintenance change before you make it live. Sell it that way. If your engineering department has code review software in place, you might as well give it a try. Chances are it’s tied into the source control system you’re both using, and automation reduces friction.

As a manager, the best thing I can do to help people get comfortable with formal code review is to reduce the friction. I want code reviewed as quickly as possible. This means being ready to do it myself if necessary, although you don’t want people dependent on you. So complain in your daily standups when other people are falling down on the job.

Oh, and get programmers involved too. You want them interested in Puppet and init scripts. You also want to be at least an observer on their code reviews, and it’s easier to get that if you invite them into your house first.

2: Integrate continuously. When you change something about a machine build, test that change in a local VM. (Take a look at Vagrant for one good tool that helps a lot with that.) After you check your changes in, the build system should do an automated test.

I personally like to have two different development environments in the build system. One is the usual engineering development environment; the other is the technical operations environment. You could integrate ops changes side by side with engineering changes, but I’m still a bit leery of that. Especially in the early days, you’ll wind up breaking the test systems a lot. The natural developer reaction to that is annoyance. I’d rather avoid that pain point and give ops a clean test bed; the tested results can integrate into the main flow daily or as necessary.

I may change my mind on this eventually. The counter-argument is the same case I’ve been making all along: configuration is code. Why treat it any differently than the rest of the codebase? That’s a pretty compelling argument and I think the perfect devops group would do it that way. I just don’t want to drive anyone insane on the way there.

3: Hire coders. I am pretty much done hiring system administrators who can’t write code in some language. I don’t care if it’s perl or ruby or python or whatever. I probably don’t want someone who only knows bash, but I could be convinced if they’re a really studly bash programmer.

I want to have a common language for the entire group; it’s kind of messy if half the routine scripts are written in perl and half are written in ruby. If nothing else, you’re cutting back on the number of people who can debug your problems. Because tech ops people like variety, it’s almost certain that we’ll be using a bunch of scripting languages. If you have Puppet and Splunk installed, you’re stuck with ruby (for Puppet) and python (for Splunk) and you’re going to need to know how to code in both. Still, you can at least make your own scripts predictable.

I can’t dig up any video of this, but Adam Jacob has a great spiel about how you’re probably a programming polyglot even if you don’t know it. If you speak perl, you’ll be able to puzzle your way through enough python to write a quick Splunk script. When hiring, you want to make sure your candidate has a reasonably good understanding of one of the common scripting languages. It doesn’t matter so much if it’s the department standard.

Your technical operations people will be writing code, though. There’s a lot of complexity in automation. That complexity is abstracted so you can deal with it on a day to day basis, and the process of abstraction is writing code. So hire coders.

The one exception is entry-level NOC people, and not every group has those. If you do have a 24/7 NOC, those guys may not be coders. However, it is mandatory to give those employees training programs which include coding. It’s hard enough maintaining camaraderie between off-hours employees and the day shift without having knowledge barriers.

One of the fun things about interviewing is the times you wind up geeking out about tech or business with a simpatico interviewer. I have been talking to a good number of such people this week, and one of the conversations I keep having is the one about using virtual servers in the cloud to handle MMO launch loads. This is a really good strategy for anything Web-based. Zynga used to launch and host new games on Amazon’s EC2, although they’ve pulled back on EC2 usage because it’s cheaper to run enough servers for baseline usage in their own data centers. There are, likewise, a ton of Web startups using EC2 or any of the other public clouds to minimize capital expenditure going into the launch of a new product.

Not surprisingly, people tend to wonder about using the same strategy for an MMO. Launch week for any MMO is a high demand time, so why not push the load into the cloud instead of buying too much hardware or stressing out the servers?

It’s an uptime problem. I don’t worry too much about the big outages — those are fluke occurrences and your datacenter’s network could have a problem of similar severity on your launch day. I do worry about the general uptime.

Web services are non-persistent. (Mostly. Yes, SPDY changes that.) A browser makes an HTTP request, and then can safely give up on the TCP connection to the Web server. State doesn’t live on individual Web servers, so if Web server one dies before the next HTTP request, you just feed the next request to Web server two and everyone’s happy.

MMO servers are persistent. (Mostly. I’m sure there are exceptions, which I would love to hear about.) The state of a given instance or zone is typically maintained on an instance/zone server. If that server dies, most likely the people in that instance are either kicked off the game or booted back to a different area. This is lousy MMO customer experience.

So that’s why I don’t think in terms of cloud for MMO launches. That said, I don’t think these are inherent problems with the concepts. Clouds can be more stable, and Amazon’s certainly working in that direction. For that matter, there are other cloud providers. Likewise, I can sketch out a couple of ways to avoid the persistence problem. I’m not entirely sure they’d work, but there are possibilities. You can at the least minimize the bad effects of random server crashes.

Still and all, it’s a lot trickier than launching anything Web-related in a public cloud.

Big sympathies for my peers and compatriots over at Bioware Austin today. The live SW:TOR servers are down for the next seven hours or so after a deployment issue resulted in old data pushed live. Since I’m not there, I can’t make any kind of informed guess as to exactly how this happened. They’ve said they need to rebuild assets; the time span for this fix is the combination of however long it takes to do the rebuild and however long it takes to push the new assets out to all their servers. Both of these are potentially time-consuming. Eight hours makes me think they need to rebuild a large chunk of the data from scratch.

In general, I recommend building a checksum check into your push process and server startup scripts. Before you fire up a server binary, you should run a checksum (maybe Adler-32) on your binaries and your data files, and make sure they match what’s expected for that build. If they don’t match, throw an error and don’t run the binary.

There are potential speed issues here if your data is large. You can speed up the process by calculating the checksum for a smaller portion of each file, or you could be a little more daring and just compare file names and sizes. Bear in mind that you’re trying to compensate for failures of the release system here, though, so you wouldn’t want to rely only on simple checks.

Also, if you’re looking at checksums which were also generated by the build system, you need to account for the possibility that those checksums are wrong. Single points of failure can occur in software as well as hardware.
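Here's a rough sketch of what that startup check could look like in Python. It's an illustration and not anyone's actual release tooling: the function names and the idea of a manifest mapping relative file paths to expected Adler-32 values are my own assumptions. The only library call it leans on is `zlib.adler32` from the standard library.

```python
import zlib
from pathlib import Path

CHUNK_SIZE = 1 << 20  # read 1 MiB at a time so large data files don't fill memory


def adler32_of(path: Path) -> int:
    """Return the Adler-32 checksum of a file, read in chunks."""
    checksum = 1  # Adler-32's defined starting value
    with path.open("rb") as handle:
        while chunk := handle.read(CHUNK_SIZE):
            checksum = zlib.adler32(chunk, checksum)
    return checksum


def find_mismatches(root: Path, manifest: dict[str, int]) -> list[str]:
    """Return the manifest entries that are missing or have the wrong checksum.

    `manifest` (hypothetical format) maps a path relative to `root`
    to the Adler-32 value expected for this build.
    """
    bad = []
    for relative_path, expected in sorted(manifest.items()):
        target = root / relative_path
        if not target.is_file() or adler32_of(target) != expected:
            bad.append(relative_path)
    return bad


def verify_or_refuse(root: Path, manifest: dict[str, int]) -> None:
    """Raise instead of returning if the files on disk are not the expected build."""
    bad = find_mismatches(root, manifest)
    if bad:
        raise RuntimeError(f"refusing to start, bad files: {', '.join(bad)}")
```

A startup script would call `verify_or_refuse` right before launching the server binary and bail out on the exception. Where the manifest comes from matters as much as the check itself: if the same build step that produced the bad data also produced the manifest, the check will happily pass.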

We’ve established that it’s way simple to manage configurations across a large number of servers. This is great for operating data centers. It’s also great for code promotion: by using configuration management consistently throughout your server environments, you reduce the chances of problems when pushing server code and data live.

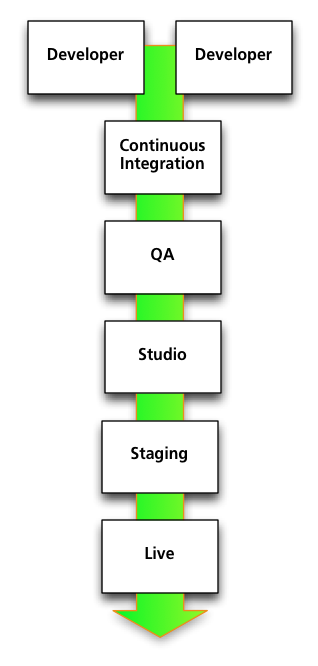

Here’s a generic simplified code promotion path. There are a bunch of developers coding away on their workstations or individual development servers. They check their changes into source control. Every time a change is checked in, the server gets rebuilt and a series of automated tests get run. If the build compiles successfully and passes the tests, it’s automatically pushed to the Continuous Integration environment.

From there, the build is pushed to QA. This is probably not triggered automatically, since QA will want to say when they’re ready for a new push. Once it’s through QA, it goes to the Studio environment for internal playtests and evaluations, then to a Staging environment in the data center, and finally to Live.
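To make the gating concrete, here's a minimal Python sketch of that path. Everything in it is hypothetical: the environment names are just the ones from this post, and the `Build` class, `promote` function, and `approved_by` argument are made up for illustration. The point is only that the hop into Continuous Integration happens on its own, while every later hop waits for a person to say go.

```python
from dataclasses import dataclass
from typing import Optional

# Environments in promotion order, as described above.
PROMOTION_PATH = ["ci", "qa", "studio", "staging", "live"]

# Only the first hop is automatic in this sketch; later hops need a sign-off.
AUTOMATIC_TARGETS = {"ci"}


@dataclass
class Build:
    version: str
    compiled: bool
    tests_passed: bool
    environment: Optional[str] = None  # None means "not deployed anywhere yet"


def next_environment(build: Build) -> Optional[str]:
    """Return the next stop on the path, or None if the build is already live."""
    if build.environment is None:
        return PROMOTION_PATH[0]
    index = PROMOTION_PATH.index(build.environment)
    if index + 1 < len(PROMOTION_PATH):
        return PROMOTION_PATH[index + 1]
    return None


def promote(build: Build, approved_by: Optional[str] = None) -> str:
    """Move a build one step along the path and return where it landed."""
    target = next_environment(build)
    if target is None:
        raise ValueError("build is already live")
    if not (build.compiled and build.tests_passed):
        raise ValueError("build failed to compile or failed its tests")
    if target not in AUTOMATIC_TARGETS and approved_by is None:
        raise PermissionError(f"promotion to {target} needs a manual sign-off")
    build.environment = target
    return target
```

In a real build system the sign-off would be a button press or a ticket, and you might well decide that more than one hop should be automatic. The sketch just shows where that decision lives.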

In practice, there’s also a content development path that has to go hand in hand with this, and since content can crash a server just as well as code can, it needs the same testing. This can add complexity to the path in a way that’s out of scope for this post, but I wanted to acknowledge it.

Back to configuration. Each one of these environments is made up of a number of servers. Those servers need to be configured. As may or may not be obvious, those configurations are in flux during development. They won’t see as much change as the code base, but we definitely want to tune them, modify them, and fix bugs in them. We do not want to try to replicate all the changes we’ve made to the various development environments by hand in the data center, even if we’re using a configuration management system at the live level. The goal is to reduce the number of manual changes that need to be made.

You can’t use the exact same configurations in each environment since underlying details like the number of servers in a cluster, the networking, and so on will change. Fortunately Chef and Puppet both support environments. This means you can use the same set of configuration files throughout your promotion path. You’ll specify common elements once, reducing the chances of fumbling fingers. Configurations that need to change can be isolated on a per-environment basis.
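If the idea is hard to picture, here's a tiny Python sketch of "common settings once, overrides per environment". This is not how Puppet or Chef implement environments; it only illustrates the shape of the idea, and every key and value in it (server counts, networks, log directory) is invented for the example.

```python
# Settings shared by every environment (all values are made up).
COMMON = {
    "package": "mmo_game_server",
    "log_dir": "/var/log/mmo",
    "zone_servers": 1,
}

# Only the details that really differ are listed per environment.
PER_ENVIRONMENT = {
    "qa": {"zone_servers": 2},
    "staging": {"zone_servers": 8, "network": "10.20.0.0/16"},
    "live": {"zone_servers": 64, "network": "10.30.0.0/16"},
}


def settings_for(environment: str) -> dict:
    """Return the common settings with that environment's overrides applied."""
    if environment not in PER_ENVIRONMENT:
        raise KeyError(f"unknown environment: {environment}")
    merged = dict(COMMON)  # copy so the shared defaults are never modified
    merged.update(PER_ENVIRONMENT[environment])
    return merged
```

The log directory is typed exactly once, so there's only one place to get it wrong, and a change to it shows up in QA long before it reaches live.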

Using Puppet as our example, since I’m more familiar with it, specifying which version of the game server you want gets reduced down to something as simple as this:

$gameserver_package = "mmo_game_server.${environment}"

package { $gameserver_package: ensure => installed, }

The $environment variable is set in the puppet configuration file on each server. Make sure your build system is naming packages appropriately — which is a one-time task — and you control server promotion by pressing the right buttons in the build system with no configuration file edits needed at all.